But how can we determine whether this rogue AP, and especially rogue wireless clients (a WLAN card in a back-office server), are inside the CDE? By signal level? But Kismet shows this information only for APs (not for clients) :(

I’ve already answered this question on the Informzaschita web site, but let’s repeat.

>How can I know whether a wireless access point with encryption enabled is part of our local network?

>Where can I find information about locating an access point by triangulation, and what kind of antenna is best?

Parabolic and Yagi antennas for the 2.4 GHz band are rather bulky, so panel antennas are more convenient to use, despite their worse directivity and sensitivity to reflected signals.

>But what if it’s a really well-configured access point: WPA2 + hidden SSID + MAC filter? It takes a long time to find as long as there’s no activity.

Any AP connected to a network “signals” anyway:

- it sends beacons (even if the ESSID is empty)
- it relays broadcasts and multicasts with source MAC addresses in clear text

It’s difficult to imagine a network without broadcast requests. And I wrote above how to detect an access point’s location using these requests.
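To illustrate the idea, here is a minimal sketch of matching relayed broadcast and multicast frames against a list of wired MAC addresses. It assumes you have already extracted `(bssid, source, destination)` tuples from a capture with a tool of your choice; the function names and the input format are made up for this example and are not part of Kismet or any other product.

```python
from collections import defaultdict


def normalize_mac(mac: str) -> str:
    """Lower-case a MAC address and use ':' as the separator."""
    return mac.strip().lower().replace("-", ":")


def is_group_address(mac: str) -> bool:
    """True for broadcast/multicast: lowest bit of the first octet is set."""
    return bool(int(mac.split(":")[0], 16) & 1)


def find_bridging_aps(observed_frames, wired_macs):
    """Return {bssid: set of wired source MACs relayed by that AP}.

    observed_frames: iterable of (bssid, source_mac, destination_mac)
        tuples taken from 802.11 data frame headers (hypothetical format).
    wired_macs: MAC addresses known to belong to hosts on our wired LAN.
    """
    known = {normalize_mac(m) for m in wired_macs}
    hits = defaultdict(set)
    for bssid, src, dst in observed_frames:
        # Only broadcast/multicast frames are considered here.
        if not is_group_address(normalize_mac(dst)):
            continue
        src = normalize_mac(src)
        if src in known:
            hits[normalize_mac(bssid)].add(src)
    return dict(hits)


# Example with invented addresses.
frames = [
    ("00:11:22:33:44:55", "AA-BB-CC-00-00-01", "ff:ff:ff:ff:ff:ff"),
    ("66:77:88:99:aa:bb", "de:ad:be:ef:00:01", "ff:ff:ff:ff:ff:ff"),
]
suspects = find_bridging_aps(frames, ["aa:bb:cc:00:00:01"])
```

A BSSID that shows up in the result is relaying traffic from hosts you know to be on your wire, which is a strong hint that the AP is plugged into your network, whether or not its payload is encrypted.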

>How do we detect clients that connect to external access points?

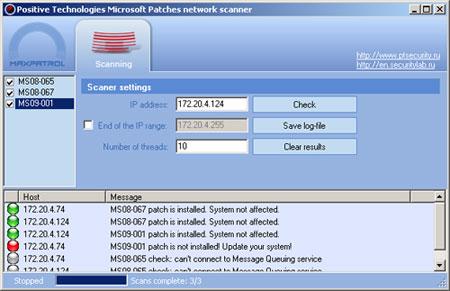

Clients that are authorized to connect to “external” access points can be detected by active security assessment mechanisms. For example, there are three mechanisms in MaxPatrol that help to resolve the problem.

Another way is to monitor the wireless network, but you need to list “your” MAC addresses beforehand. This can be done with active (see above) or passive (see below) mechanisms.
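The monitoring approach boils down to a simple comparison once the MAC list exists. The sketch below assumes you already have client-to-AP associations from a wireless monitor as `(client_mac, bssid)` pairs; the function name and input format are hypothetical.

```python
def find_clients_on_external_aps(associations, own_client_macs, own_bssids):
    """Return (client, bssid) pairs where one of our clients talks to a foreign AP.

    associations: iterable of (client_mac, bssid) pairs seen on the air.
    own_client_macs: MAC addresses of our workstations, listed beforehand.
    own_bssids: BSSIDs of the access points we operate ourselves.
    """
    own_clients = {m.lower() for m in own_client_macs}
    own_aps = {b.lower() for b in own_bssids}
    flagged = []
    for client, bssid in associations:
        client, bssid = client.lower(), bssid.lower()
        if client in own_clients and bssid not in own_aps:
            flagged.append((client, bssid))
    return flagged
```

The result is only as good as the MAC inventory: a corporate laptop missing from `own_client_macs` will go unnoticed.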

>How can I understand that this is my users? Something about it is written

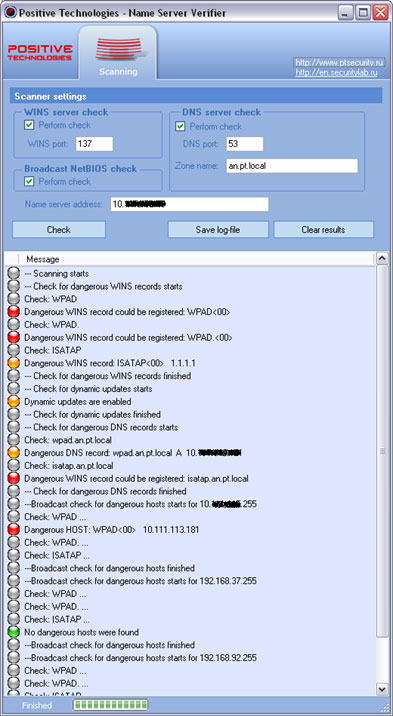

here (Russian).But in any case, a workstation (especially under Windows) sends a lot of interesting traffic which allows to define network membership. This is both NetBIOS Broadcast and

WPAD requests, and also DHCP requests which contain host and domain name...But one question is still open – how to send this kind of traffic? Here

Gnivirdraw can help us.>Active scanners don’t help us!!! Of course, sometimes to run along with laptop is useful :). But scanners can help to do the following:

- fingerprinting of network devices (including APs) in pentest mode

- inventory of wireless client configuration (MAC addresses, lists of networks)

- analysis of access point configuration

- analysis of wireless device logs in order to find “bad” events
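Coming back to the passive side: DHCP requests carry the host and domain name, so a captured request is often enough to tell whose workstation it is. Below is a rough sketch that pulls those fields out of a raw DHCP message (the UDP payload). It assumes the standard layout of a 236-byte BOOTP header, the magic cookie, and type-length-value options, with option 12 as host name, option 15 as domain name, and option 81 as client FQDN. It ignores option overload and options split across several fields, and the function names are made up for this example.

```python
DHCP_MAGIC_COOKIE = b"\x63\x82\x53\x63"
BOOTP_HEADER_LEN = 236  # fixed part of the message before the cookie


def parse_dhcp_options(payload: bytes) -> dict:
    """Return {option_code: raw_value} for a DHCP message (UDP payload)."""
    start = BOOTP_HEADER_LEN
    if payload[start:start + 4] != DHCP_MAGIC_COOKIE:
        raise ValueError("not a DHCP message: magic cookie missing")
    options = {}
    i = start + 4
    while i < len(payload):
        code = payload[i]
        if code == 255:  # End option
            break
        if code == 0:  # Pad option, has no length byte
            i += 1
            continue
        if i + 1 >= len(payload):  # truncated message
            break
        length = payload[i + 1]
        options[code] = payload[i + 2:i + 2 + length]
        i += 2 + length
    return options


def decode_client_fqdn(value: bytes) -> str:
    """Decode option 81: flags, two RCODE bytes, then the name."""
    if len(value) < 3:
        return ""
    flags, name = value[0], value[3:]
    if flags & 0x04:  # E bit set: name is in DNS wire format (length-prefixed labels)
        labels, i = [], 0
        while i < len(name) and name[i] != 0:
            n = name[i]
            labels.append(name[i + 1:i + 1 + n].decode("ascii", "replace"))
            i += 1 + n
        return ".".join(labels)
    return name.decode("ascii", "replace")  # plain ASCII encoding


def client_identity(payload: bytes) -> dict:
    """Extract host name, domain name and FQDN from a DHCP message."""
    opts = parse_dhcp_options(payload)
    return {
        "hostname": opts.get(12, b"").decode("ascii", "replace"),
        "domain": opts.get(15, b"").decode("ascii", "replace"),
        "fqdn": decode_client_fqdn(opts.get(81, b"")),
    }
```

If the extracted FQDN ends with your corporate domain, the client is very likely one of yours, regardless of which access point it is currently talking to.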